In decentralized storage, the core challenge is not where data lives—it is how data survives. Nodes go offline, networks fragment, and participants behave unpredictably. Traditional systems respond to this uncertainty by copying data again and again. Walrus takes a more deliberate path. Instead of replication, it relies on erasure coding to achieve durability, availability, and cost efficiency at the same time.

Erasure coding is not a new concept, but Walrus applies it in a way that is tightly aligned with decentralized incentives and on-chain verification.

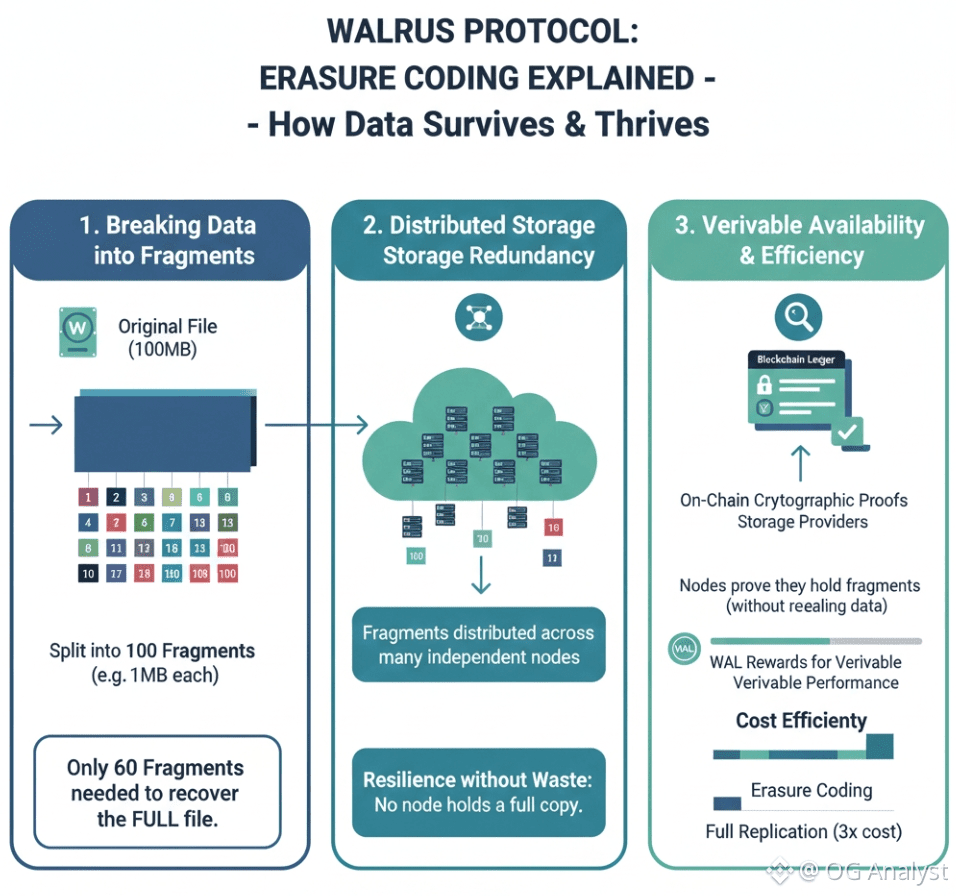

Breaking data into meaningfully redundant parts

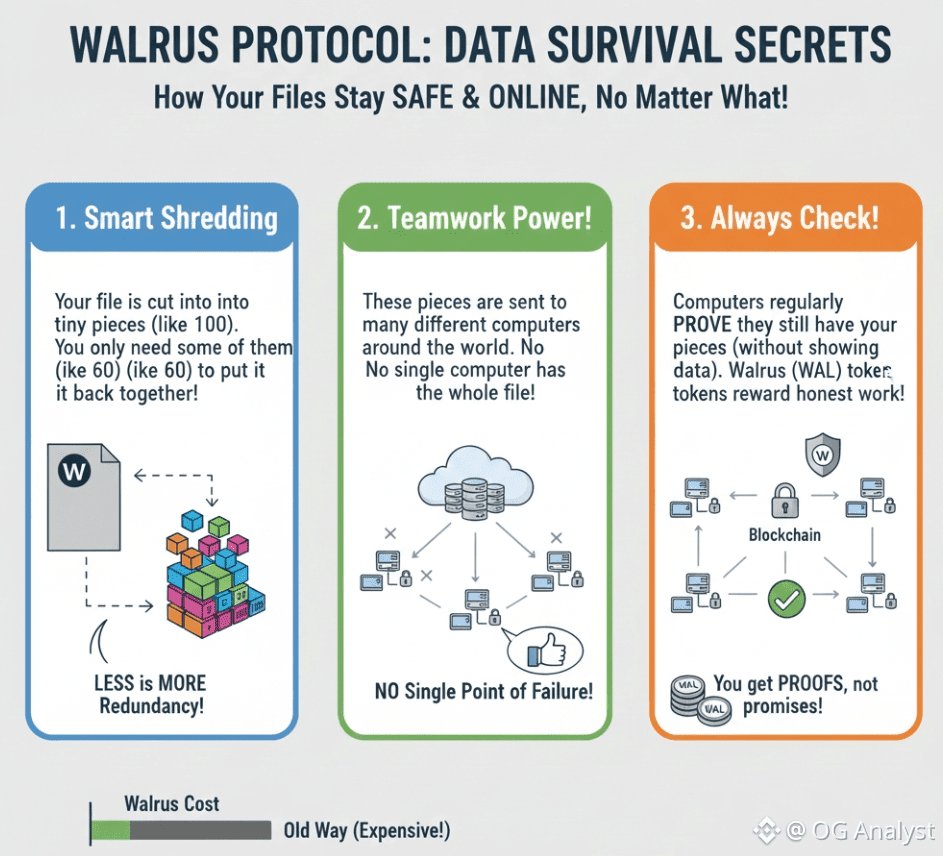

When data is uploaded to Walrus, it is not stored as a single object or copied wholesale across nodes. Instead, the data is mathematically transformed into many smaller fragments. These fragments are generated such that only a subset of them is required to reconstruct the original file.

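For example, a file might be encoded into 100 fragments, any 60 of which are enough to recover the full data. The other 40 act as redundancy, not as identical backups but as mathematically linked pieces. If each fragment is 1/60 of the file's size, the file survives the loss of any 40 fragments at a storage overhead of roughly 1.67x (100/60). This is the essence of erasure coding: resilience with far less waste than full copies.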
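The "any k of n" property can be sketched with a toy code built on polynomial interpolation over a small prime field, in the style of Reed-Solomon codes. This is an illustration only, not Walrus's actual encoding: the field size `P`, the function names, and the one-byte-per-fragment layout are all assumptions made for the example.

```python
# Toy k-of-n erasure code over the prime field GF(257). Illustrative only;
# P, encode, decode and the one-byte-per-fragment layout are assumptions.
P = 257  # a prime larger than any byte value; also limits n to at most 257


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points` (Lagrange, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, n: int):
    """Treat the k data bytes as polynomial values at x = 0..k-1, extend to n points."""
    k = len(data)
    base = list(enumerate(data))
    return [(x, data[x] if x < k else _interpolate(base, x)) for x in range(n)]


def decode(fragments, k: int) -> bytes:
    """Rebuild the k data bytes from any k distinct (x, y) fragments."""
    points = fragments[:k]
    return bytes(_interpolate(points, x) for x in range(k))


fragments = encode(b"walrus", n=10)      # k = 6 data symbols, 4 redundant ones
survivors = fragments[4:]                # pretend the first 4 fragments were lost
recovered = decode(survivors, k=6)
```

Here four of the ten fragments are discarded, including most of the original bytes, and the remaining six still determine the data. A production code would operate on large symbols and use faster arithmetic, but the recovery principle is the same.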

Distribution without dependence on specific nodes

Once encoded, fragments are distributed across a decentralized network of storage providers. No single node holds a complete copy of the data, and no small group of nodes becomes indispensable.

This design choice matters. In replicated systems, the loss of specific replicas can degrade performance or force emergency recovery. In Walrus, fragments are interchangeable for recovery purposes: no particular fragment is required, only a sufficient number of them. As long as enough fragments remain accessible, the data remains intact. This makes the system naturally tolerant of churn, outages, and uneven participation.
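To get a rough feel for "enough fragments remain accessible", the sketch below computes the probability that at least k of n fragments are reachable. It assumes each fragment is reachable independently with the same probability `p_up`, which is a simplification: real outages are often correlated. The function name and parameters are hypothetical.

```python
from math import comb


def survival_probability(n: int, k: int, p_up: float) -> float:
    """P(at least k of n fragments reachable), assuming independent availability."""
    return sum(
        comb(n, i) * p_up**i * (1 - p_up) ** (n - i)
        for i in range(k, n + 1)
    )


# Each node is up 80% of the time in this hypothetical scenario.
erasure_60_of_100 = survival_probability(n=100, k=60, p_up=0.8)
three_replicas = survival_probability(n=3, k=1, p_up=0.8)  # any 1 of 3 copies
```

Under these assumptions the 60-of-100 layout is more likely to remain recoverable than three full replicas, even though it stores less raw data.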

Verifiable availability instead of blind trust

Erasure coding alone is not sufficient in a decentralized environment. Walrus pairs it with cryptographic commitments and on-chain proofs that allow the network to verify that storage providers are actually holding their assigned fragments.

Providers must periodically demonstrate availability without revealing the underlying data. This keeps the system honest while preserving privacy. WAL incentives are tied to these proofs, ensuring that efficiency does not come at the cost of accountability.
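One generic way to build a commitment that can later be challenged is a Merkle tree over the chunks of a fragment. The sketch below is not Walrus's proof system; the function names and chunk layout are assumptions. It also reveals the challenged chunk to the verifier, so it illustrates only the commitment check, not the privacy property described above.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves, index):
    """Return (root, proof) for leaves[index].

    proof is a list of (sibling_hash, sibling_is_left) pairs, bottom to top.
    """
    level = [_h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:              # duplicate the last node on odd levels
            level.append(level[-1])
        sibling = index ^ 1             # neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify(root: bytes, leaf: bytes, proof) -> bool:
    """Recompute the path from leaf to root and compare with the commitment."""
    node = _h(leaf)
    for sibling, sibling_is_left in proof:
        node = _h(sibling + node) if sibling_is_left else _h(node + sibling)
    return node == root


chunks = [b"c0", b"c1", b"c2", b"c3", b"c4"]     # hypothetical fragment chunks
root, proof = merkle_root_and_proof(chunks, 4)   # the verifier challenges chunk 4
honest = verify(root, chunks[4], proof)
forged = verify(root, b"not the chunk", proof)
```

Only the 32-byte root needs to be published ahead of time. A provider that has discarded the chunk cannot produce a response that hashes up to that root.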

Cost efficiency through reduced duplication

The economic advantage of erasure coding becomes clear when compared to full replication. Storing three full copies of a dataset triples storage costs. Erasure coding achieves comparable—or higher—fault tolerance with significantly less raw storage.

For users, this means lower long-term storage fees. For the network, it means less hardware redundancy is required to support the same level of reliability. WAL acts as the unit of exchange that prices this efficiency transparently.
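The comparison can be made concrete with a little arithmetic. The sketch below uses the 60-of-100 figures from the earlier example; the function names are hypothetical, and it assumes each fragment is 1/k of the original size. Note that the two loss counts are not the same unit: a replica is a full copy, while a fragment is a small piece.

```python
def replication(copies: int):
    """(storage overhead, full copies that may be lost) for plain replication."""
    return float(copies), copies - 1


def erasure(n: int, k: int):
    """(storage overhead, fragments that may be lost) for a k-of-n code."""
    return n / k, n - k


rep_overhead, rep_losses = replication(3)    # three full copies
ec_overhead, ec_losses = erasure(100, 60)    # 60-of-100 erasure code

# Raw storage needed for a 1 TB dataset under each scheme, in TB.
dataset_tb = 1.0
rep_raw_tb = dataset_tb * rep_overhead
ec_raw_tb = dataset_tb * ec_overhead
```

In this example replication stores three times the data and survives the loss of two copies, while the erasure-coded layout stores about 1.67 times the data and survives the loss of any 40 of its 100 fragments.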

Scalability that improves with network size

As more storage providers join Walrus, erasure coding becomes more effective, not less. Fragment distribution can be spread across a wider set of participants, reducing concentration and improving resilience.

This creates a positive feedback loop: increased participation strengthens both decentralization and cost efficiency. Unlike replicated systems, where every stored byte is paid for several times over no matter how large the network grows, Walrus benefits from scale structurally.
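The effect of spreading fragments across more providers can be sketched with a simple worst-case bound. The code assumes fragments are divided as evenly as possible among providers, which is not necessarily how Walrus assigns them; the function name is hypothetical and the result is a conservative lower bound.

```python
from math import ceil


def tolerated_provider_failures(n: int, k: int, providers: int) -> int:
    """Lower bound on provider failures survivable with an even spread.

    n fragments, any k of which recover the data, are split as evenly as
    possible, so the most heavily loaded provider holds ceil(n / providers).
    """
    per_provider = ceil(n / providers)
    return (n - k) // per_provider


# The same 60-of-100 encoding spread over networks of different sizes.
small = tolerated_provider_failures(100, 60, providers=10)    # 10 fragments each
medium = tolerated_provider_failures(100, 60, providers=50)   # 2 fragments each
large = tolerated_provider_failures(100, 60, providers=100)   # 1 fragment each
```

With the encoding held fixed, the number of provider failures the data can absorb grows as each provider holds a smaller share of the fragments.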

A system designed for imperfect conditions

Perhaps the most important aspect of erasure coding in Walrus is philosophical rather than technical. The protocol does not assume ideal behavior or constant uptime. It assumes partial failure as the norm and designs around it.

By combining erasure coding with cryptographic verification and WAL-based incentives, Walrus turns unreliable components into a reliable system—without central coordination.

Conclusion

Within the Walrus protocol, erasure coding is not just a storage optimization; it is the foundation of the network’s efficiency and resilience. By transforming data into recoverable fragments, distributing them widely, and verifying availability on-chain, Walrus delivers durable storage at lower cost and higher decentralization. It is a practical response to the realities of decentralized infrastructure, engineered for longevity rather than convenience.