Building AI agents on Sui often raises doubts about data handling. The Walrus protocol addresses them head-on by providing verifiable storage for datasets and models. This deep dive debunks three common myths and shows how Walrus enables reproducible AI workflows, with WAL token utility built in.

Myth 1: Onchain AI Lacks Data Integrity

Skeptics claim that data stored through a blockchain degrades or can be silently altered over time. Walrus counters this with cryptographic commitments: each blob upload yields a blob ID derived from the content, plus a proof that can be verified on Sui. Any change to a dataset is therefore detectable, and WAL payments fund how long the blob stays available.
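
To make the tamper-detection idea concrete, here is a minimal Python sketch. It assumes a plain SHA-256 digest as a stand-in for a content-derived identifier; Walrus computes its blob IDs differently, and the names `content_id` and `is_untampered` are hypothetical.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Return a hex digest used here as a content-derived identifier (stand-in only)."""
    return hashlib.sha256(data).hexdigest()


def is_untampered(data: bytes, expected_id: str) -> bool:
    """Recompute the identifier and compare it with the one recorded at upload time."""
    return content_id(data) == expected_id


dataset = b"label,text\n1,hello\n0,world\n"
recorded_id = content_id(dataset)                # what a publisher would record
tampered = dataset.replace(b"hello", b"hellp")   # a one-byte change

print(is_untampered(dataset, recorded_id))
print(is_untampered(tampered, recorded_id))
```

Because the identifier depends on every byte, even a one-character edit produces a different digest, which is the property an agent relies on when it pins a dataset.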

Fact 1: Verifiable Proofs in Action

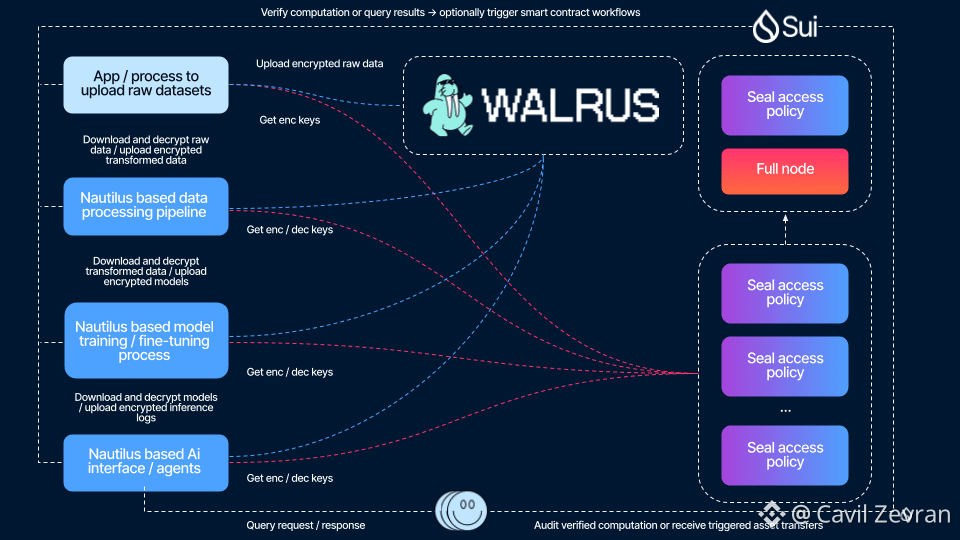

Walrus uses availability proofs to confirm that data is stored intact. Storage nodes hold erasure-coded slivers, and clients sample them to verify. Smart contracts on Sui enforce these proofs, so AI agents can rely on the same inputs from run to run. WAL rewards honest nodes and, according to official sources, penalizes failures through burns.
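
As a rough illustration of why sampling works, the sketch below estimates how many random checks a client needs before it is likely to notice missing slivers. It assumes independent, uniformly random samples and a hypothetical fraction of missing slivers; it is not the challenge protocol Walrus actually runs, and both function names are illustrative.

```python
import math


def detection_probability(missing_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` independent checks hits a missing sliver."""
    return 1.0 - (1.0 - missing_fraction) ** samples


def samples_needed(missing_fraction: float, confidence: float) -> int:
    """Smallest number of independent samples that reaches the target confidence."""
    if not 0.0 < missing_fraction < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("both arguments must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - missing_fraction))


# Hypothetical scenario: 10% of slivers are missing, and we want 99% confidence.
needed = samples_needed(0.10, 0.99)
print(needed, detection_probability(0.10, needed))
```

The required sample count grows only logarithmically as the confidence target tightens, which is why spot checks can be far cheaper than downloading the whole blob.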

Myth 2: Reproducibility Suffers in Decentralized Setups

Centralized clouds make replication easy, and many assume decentralized storage is chaotic by comparison. Walrus represents stored data as programmable Sui objects, so agents can reference an exact version of a dataset. That keeps workflows consistent across runs.

Fact 2: Programmable Data for Repeatable Outputs

Through Move integration, Walrus blobs are represented as objects with metadata. AI agents query these objects to load a fixed dataset, so agent logic that is itself deterministic produces identical results on every run. WAL covers access fees, which are distributed to stakers for network upkeep.
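
One way to picture version pinning is the Python sketch below. `BlobRef` is a hypothetical off-chain mirror of the on-chain object (its fields are assumptions, not the Walrus schema), and `run_agent` stands in for agent logic that is deterministic once its seed is fixed.

```python
import hashlib
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BlobRef:
    """Hypothetical pinned reference to one exact dataset version."""
    blob_id: str   # identifier recorded at upload time (placeholder value below)
    version: int   # version label chosen by the publisher
    sha256: str    # digest of the exact bytes the agent expects


def run_agent(data: bytes, ref: BlobRef, seed: int) -> List[int]:
    """Reject unexpected bytes, then pick two row indices with a seeded RNG."""
    if hashlib.sha256(data).hexdigest() != ref.sha256:
        raise ValueError("fetched bytes do not match the pinned reference")
    rng = random.Random(seed)          # local RNG, so global state cannot interfere
    rows = data.splitlines()
    return sorted(rng.sample(range(len(rows)), k=2))


data = b"a\nb\nc\nd\n"
ref = BlobRef(blob_id="example-blob-id", version=1,
              sha256=hashlib.sha256(data).hexdigest())
first = run_agent(data, ref, seed=7)
second = run_agent(data, ref, seed=7)
print(first == second)
```

Pinning both the bytes and the seed removes the two usual sources of drift between runs: changed inputs and unseeded randomness.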

Myth 3: Workflows Break Under Scale

High-volume AI tasks supposedly overwhelm onchain storage. Walrus's RedStuff encoding spreads each blob across many storage nodes, so swarms of agents can read data without depending on a single server. Reproducibility therefore scales without a central chokepoint.

Fact 3: Erasure Coding for Durable Access

RedStuff arranges data in a matrix and encodes it into slivers, tolerating failures in up to one third of nodes. Agents retrieve data from a quorum of nodes, which keeps workflows intact when some nodes are offline. WAL incentives align node performance with ecosystem reliability.
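
The recovery idea can be shown with a much simpler scheme than RedStuff. The sketch below is a single-parity XOR erasure code in Python: it survives the loss of any one shard, whereas RedStuff is a two-dimensional encoding that tolerates far more. Function names and the shard count are illustrative assumptions.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal shards (zero-padded) plus one XOR parity shard."""
    shard_len = -(-len(data) // k)                 # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]    # parity goes last


def recover(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the data when at most one shard (data or parity) is missing."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    if missing:
        present = [s for s in shards if s is not None]
        shards = list(shards)
        shards[missing[0]] = reduce(xor_bytes, present)  # XOR of the rest restores it
    return b"".join(shards[:-1])[:original_len]          # drop parity and padding


message = b"agent training set"
pieces = encode(message, k=3)
damaged: List[Optional[bytes]] = list(pieces)
damaged[1] = None                                  # simulate one failed node
print(recover(damaged, len(message)) == message)
```

The same principle, redundancy computed from the data so that missing pieces can be rebuilt from the survivors, is what lets agents keep reading when some nodes are down.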

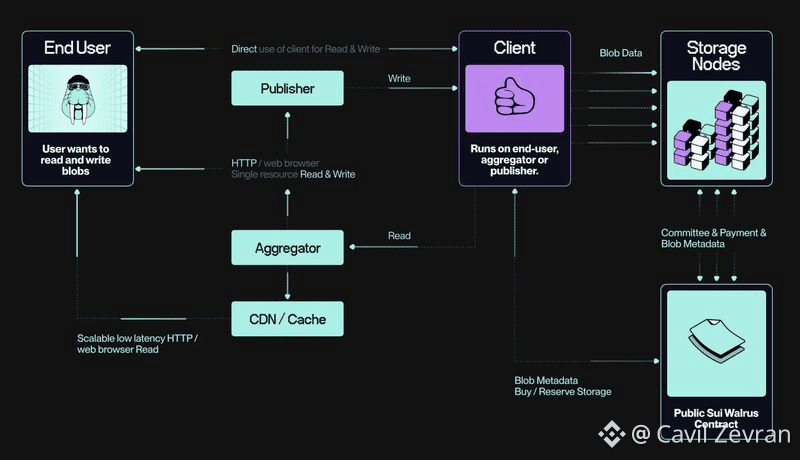

Key Workflow Components

- Dataset anchoring: Store AI training data as blobs to obtain verifiable IDs.

- Model persistence: Keep inference models on Walrus so agents can reload them.

- Memory management: Use blobs for agent state, enabling reproducibility across multi-step runs.

- Payment rails: WAL pays for storage epochs and access.

Blueprint Walkthrough: Building an AI Agent Workflow

Here's a step-by-step guide to deploying a reproducible AI agent on Sui using Walrus:

1. Prepare data: Format your AI dataset as a blob, ensuring it fits within Walrus's per-upload size limit (given here as 1 GB; verify the current limit before uploading).

2. Upload to Walrus: Use the CLI to store the blob, specifying the number of epochs to pay for in WAL. The upload returns a blob ID.

3. Integrate with Sui: Create a Move object that references the blob ID and carries metadata for integrity checks.

4. Define agent logic: Write a smart contract that references the blob for inputs and enforces proof verification.

5. Execute workflow: Trigger the agent to fetch the data via Walrus APIs and process it in Sui transactions.

6. Verify reproducibility: Audit by resampling proofs and confirming that outputs match prior runs.

7. Manage incentives: Pay WAL to extend storage; nodes earn a share for maintaining the data.
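
The steps above can be sketched end to end with local stand-ins. In this Python sketch, `InMemoryStore` replaces the Walrus upload and fetch calls, a plain dictionary replaces the Move object, and a SHA-256 digest replaces the real blob ID. All of these names are hypothetical, and nothing here talks to Walrus or Sui.

```python
import hashlib
import json
from typing import Dict


class InMemoryStore:
    """Local stand-in for blob storage, keyed by content digest. Not the Walrus API."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        blob_id = hashlib.sha256(data).hexdigest()
        self._blobs[blob_id] = data
        return blob_id

    def get(self, blob_id: str) -> bytes:
        data = self._blobs[blob_id]
        if hashlib.sha256(data).hexdigest() != blob_id:   # integrity check on read
            raise ValueError("stored bytes no longer match their identifier")
        return data


def agent_step(dataset: bytes) -> Dict[str, int]:
    """Deterministic toy 'inference': count rows per label."""
    counts: Dict[str, int] = {}
    for line in dataset.decode().splitlines()[1:]:        # skip the header row
        label = line.split(",")[0]
        counts[label] = counts.get(label, 0) + 1
    return counts


def output_digest(result: Dict[str, int]) -> str:
    """Digest of a canonical JSON encoding, so equal results hash equally."""
    return hashlib.sha256(json.dumps(result, sort_keys=True).encode()).hexdigest()


store = InMemoryStore()
blob_id = store.put(b"label,text\n1,hello\n0,world\n1,again\n")   # steps 1-2
registry = {"dataset_v1": {"blob_id": blob_id, "epochs": 5}}       # step 3 stand-in
run_a = agent_step(store.get(registry["dataset_v1"]["blob_id"]))   # steps 4-5
run_b = agent_step(store.get(registry["dataset_v1"]["blob_id"]))   # step 6: audit rerun
print(output_digest(run_a) == output_digest(run_b))
```

Comparing output digests rather than raw outputs keeps the audit cheap: only a short hash from each prior run has to be retained.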

Ecosystem Ties to WAL Utility

Walrus workflows rely on WAL for both payments and penalties. Users stake WAL to operate nodes and earn a share of storage fees. This incentive loop is what lets AI agent workloads scale within the ecosystem.

Risks & Constraints

- Network congestion on Sui could delay blob retrievals during peak agent activity.

- WAL volatility impacts cost predictability for long-term dataset storage.

- Proof sampling adds computational overhead, which may rule out very low-power agents.
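
To gauge the cost-predictability risk, a back-of-the-envelope calculation can help. The Python sketch below assumes a flat, hypothetical price per MiB per epoch and made-up WAL exchange rates; real Walrus pricing and its units should be taken from current network parameters.

```python
from typing import Tuple


def storage_cost_wal(size_mib: float, epochs: int, wal_per_mib_epoch: float) -> float:
    """Total WAL owed under an assumed flat price per MiB per epoch."""
    if size_mib < 0 or epochs < 0 or wal_per_mib_epoch < 0:
        raise ValueError("inputs must be non-negative")
    return size_mib * epochs * wal_per_mib_epoch


def fiat_range(cost_wal: float, low_rate: float, high_rate: float) -> Tuple[float, float]:
    """Fiat cost at the low and high ends of an assumed WAL exchange-rate band."""
    return cost_wal * low_rate, cost_wal * high_rate


# All numbers below are hypothetical placeholders, not real prices.
cost = storage_cost_wal(size_mib=512, epochs=10, wal_per_mib_epoch=0.001)
low, high = fiat_range(cost, low_rate=0.40, high_rate=0.60)
print(cost, low, high)
```

Running the same calculation across a plausible exchange-rate band shows how wide the budget for a long-lived dataset needs to be.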

Walrus makes AI agents on Sui more dependable by enforcing data integrity and supporting reproducibility. WAL underpins the incentives that keep these workflows sustainable. The blueprint above is a starting point for reliable automation.

In what ways could verifiable datasets reshape your AI agent designs on Walrus?