We exist in an industry defined by a single, powerful word: permanence. We tell newcomers that the blockchain is immutable. We tell institutions that the ledger is eternal. We tell ourselves that once we mint a token or publish a contract, it is etched into the digital bedrock of history, safe from censorship, decay, or deletion. This is the primary narrative of the crypto ecosystem in 2026, and for the vast majority of users, it is a comfortable, reassuring lie.

The uncomfortable truth we avoid discussing at conferences is that most of what we call "Web3" is simply Web2 with a wallet connection. While the ownership of your asset lives on a decentralized ledger, the asset itself (the high-definition image, the complex frontend interface, the sprawling data set for that on-chain game) is almost certainly living on a server farm in Virginia owned by a massive centralized corporation. We have built a financial system that claims to be trustless, yet it relies entirely on the continued benevolence and billing cycles of Amazon Web Services, Google Cloud, or Microsoft Azure.

If you pause to look closely at the architecture of our current ecosystem, you will see the cracks in this facade. When a centralized cloud provider changes its terms of service, or when a project runs out of funding to pay its monthly server costs, the "immutable" asset vanishes. The token remains in your wallet, cryptographically secure and utterly useless, pointing to a URL that now returns a 404 error code. This is not a theoretical risk. We have seen it happen with early NFT projects, with decentralized social media prototypes, and even with the governance interfaces of major DeFi protocols. The logic is decentralized, but the memory is not.

This fragility exists because we have fundamentally misunderstood the limitations of our own technology. Layer 1 blockchains like Ethereum, Solana, and even high-throughput networks like Sui are designed to be excellent processing units. They are the CPUs of this global computer. They are optimized to order transactions, verify signatures, and update balances with extreme speed and security. However, they are catastrophically bad at storage. Trying to store a gigabyte of data on a Layer 1 blockchain is the economic equivalent of trying to store your family photo album in the high-speed cache of a computer processor. It is technically possible, but it will cost you a fortune and clog the entire system for everyone else.

Because of this cost, developers have been forced into a dangerous compromise. They store the "important" stuff (the money) on chain and the "heavy" stuff (images, code, data) on centralized servers. This breaks the promise of the decentralized web. It creates a kill switch for any application. If you can shut down the frontend interface or delete the data source, it does not matter that the smart contract is censorship resistant. The application is dead.

This structural failure is the exact reason the Walrus protocol exists. It is not another blockchain trying to compete for liquidity or users. It is a fundamental rethinking of how we handle the "memory" of the decentralized internet. Walrus recognizes that we cannot keep putting band-aids on this problem with pinning services or temporary caches. We need a dedicated, incentivized, and mathematically proven layer for storing "blobs" (large, unstructured binary objects) that offers the same guarantees of permanence as the blockchain itself, without the crushing economic weight of full replication.

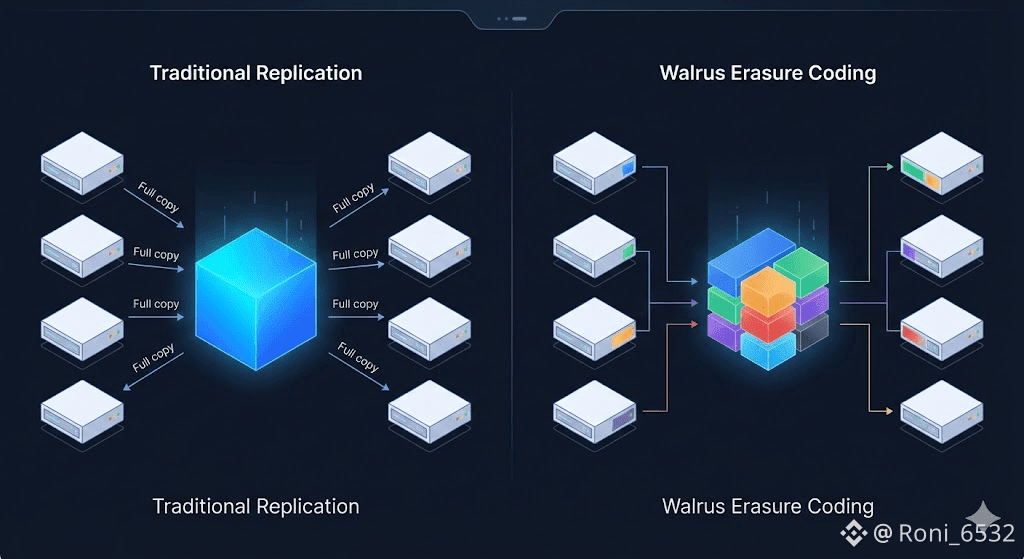

To understand why Walrus is the necessary answer to this crisis, you have to understand the math of "Red Stuff." In a typical blockchain or naive storage network, if you want to ensure a file survives, you copy it. You might store ten full copies of a file on ten different nodes. If one node goes down, you have nine others. This works, but it is incredibly inefficient. You are paying for ten times the storage you actually use. As the network grows, this redundancy becomes a financial black hole.
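The replication arithmetic above can be made concrete in a few lines of Python. This is a minimal sketch under a simplifying assumption of ours, not the protocol's: every node fails independently with the same probability `p_fail`. The function names are hypothetical.

```python
def replication_overhead(copies: int) -> float:
    """Storage consumed per byte of original data when every node keeps a full copy."""
    return float(copies)


def replication_loss_probability(copies: int, p_fail: float) -> float:
    """Chance that every copy is lost, assuming independent node failures (a simplification)."""
    return p_fail ** copies


# Compare a few replication factors at a 10% per-node failure probability.
for copies in (1, 3, 10):
    print(
        f"copies={copies:>2}: {replication_overhead(copies):.0f}x storage, "
        f"loss probability {replication_loss_probability(copies, p_fail=0.1):.0e}"
    )
```

Each extra copy improves durability, but each one also costs a full file's worth of storage, so the bill grows in lockstep with the redundancy.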

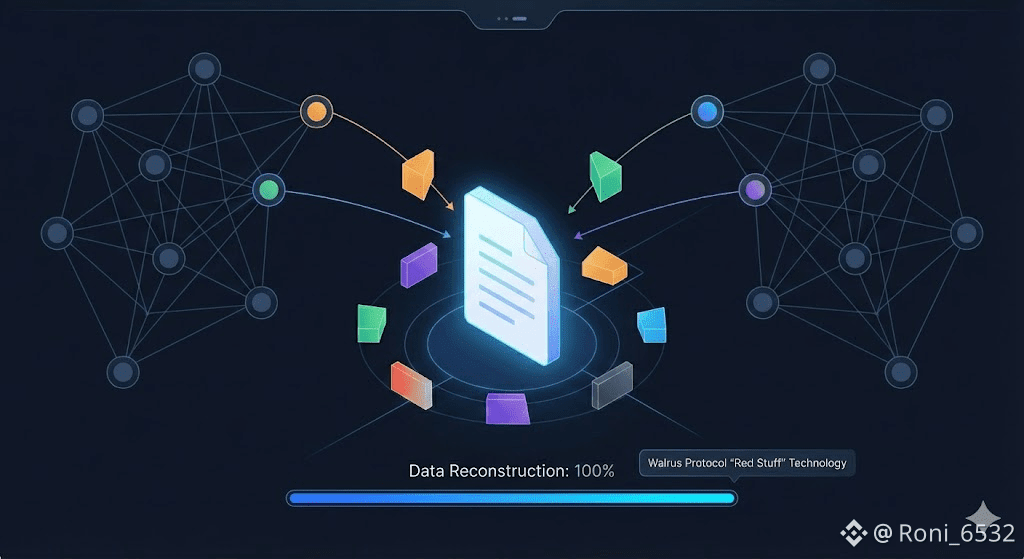

Walrus takes a different approach using two-dimensional erasure coding, a technique the team calls Red Stuff. Instead of copying the file ten times, the protocol breaks the file into a mathematical grid of "slivers." These slivers are encoded in such a way that you do not need all of them to get your data back; a fraction of the total slivers is enough to reconstruct the original file perfectly. This allows the network to offer extreme durability, mathematically guaranteeing that the data survives even if a large percentage of nodes go offline, while requiring a storage overhead of only perhaps four or five times the file size rather than ten or twenty.
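To see why a subset of pieces suffices, here is a minimal one-dimensional erasure code in Python. It is a Reed-Solomon-style construction over the prime field of size 257 using Lagrange interpolation, not Red Stuff itself: Red Stuff arranges data in two dimensions so that nodes can repair lost slivers cheaply, which this sketch does not attempt. The functions `encode` and `decode` and the parameters `k` (shards needed) and `n` (shards stored) are illustrative choices of ours.

```python
P = 257  # prime field size, large enough to hold any byte value (0..255)


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division mod P via Fermat's little theorem: den^(P-2) is den's inverse.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> list[list[int]]:
    """Split data into k-byte stripes; return n shards holding one symbol per stripe."""
    assert 0 < k <= n < P
    padded = data + bytes(-len(data) % k)  # zero-pad to a whole number of stripes
    shards: list[list[int]] = [[] for _ in range(n)]
    for start in range(0, len(padded), k):
        # The k data bytes are the polynomial's values at x = 1..k (systematic code).
        stripe = [(x + 1, b) for x, b in enumerate(padded[start:start + k])]
        for x in range(1, n + 1):
            # Shards 1..k hold the data; shards k+1..n hold extra points on the same curve.
            shards[x - 1].append(stripe[x - 1][1] if x <= k else _interpolate_at(stripe, x))
    return shards


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k shards, keyed by 0-based shard index."""
    if len(available) < k:
        raise ValueError("not enough shards to reconstruct")
    chosen = sorted(available.items())[:k]
    out = bytearray()
    for s in range(len(chosen[0][1])):
        points = [(idx + 1, shard[s]) for idx, shard in chosen]
        out.extend(_interpolate_at(points, x) for x in range(1, k + 1))
    return bytes(out[:length])


message = b"the memory lives on Walrus"
shards = encode(message, k=4, n=10)
survivors = {i: shards[i] for i in (1, 4, 7, 9)}  # six of the ten shards are lost
print(decode(survivors, k=4, length=len(message)))
```

With `k=4` and `n=10`, this sketch stores 2.5 times the original data and survives the loss of any six shards, whereas ten-way replication stores ten times the data to survive nine losses. Real deployments choose their parameters differently; the numbers here only show the shape of the trade-off.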

This efficiency is not just about saving money; it is about enabling scale that was previously impossible. Consider the rise of decentralized artificial intelligence. In 2026, the intersection of crypto and AI is the most critical frontier we have. We are building agents that live on chain and transact autonomously. But where does the "brain" of that agent live? A modern AI model consists of gigabytes, sometimes terabytes, of weights and training data. You cannot put that on Sui. You cannot put it on Ethereum. If you put it on a centralized server, the agent is no longer autonomous; it is tethered to a corporate leash.

Walrus provides the missing piece of this puzzle. It allows the AI model to be stored as a blob on a decentralized network, verified and paid for on chain, but physically distributed across many independent storage nodes. The "Red Stuff" encoding ensures that even if a government crackdown or a natural disaster takes out half the network, the model can still be reconstructed and the agent can continue to function. The logic lives on Sui, the memory lives on Walrus, and the two function in perfect symbiosis.

This separation of concerns is the quiet genius of the protocol. Walrus does not try to do everything. It does not try to be a general-purpose computation layer. It leaves the consensus, the payments, and the identity management to the Sui blockchain. This allows Walrus to be incredibly lightweight and focused. It acts as a specialized utility, a "hard drive" that plugs into the "CPU" of Sui. This modularity means that Walrus inherits the speed and security of Sui without bloating it. It also means that developers can build applications that feel like Web2 (snappy, rich in media, deep in data) while retaining the trustless nature of Web3.
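The division of labor described above can be sketched in Python: the coordination layer keeps only a small record that commits to the data, the storage layer keeps the bytes, and a reader checks one against the other. This is a hypothetical model, not the Walrus or Sui API: `BlobRecord`, `store_blob`, and `read_blob` are names we made up, two plain dictionaries stand in for the chain and the storage network, and a SHA-256 digest stands in for Walrus's actual blob ID derivation.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobRecord:
    """Hypothetical on-chain entry: a fixed-size commitment, whatever the blob's size."""
    blob_id: str  # SHA-256 hex digest (stand-in for the real blob ID scheme)
    size: int     # length of the blob in bytes


def store_blob(chain: dict[str, BlobRecord], storage: dict[str, bytes], data: bytes) -> BlobRecord:
    """Put the heavy bytes in the storage layer and only the small record on the chain."""
    record = BlobRecord(hashlib.sha256(data).hexdigest(), len(data))
    storage[record.blob_id] = data
    chain[record.blob_id] = record
    return record


def read_blob(chain: dict[str, BlobRecord], storage: dict[str, bytes], blob_id: str) -> bytes:
    """Fetch bytes from storage and accept them only if they match the on-chain record."""
    record = chain[blob_id]
    data = storage[blob_id]
    if len(data) != record.size or hashlib.sha256(data).hexdigest() != record.blob_id:
        raise ValueError("storage returned data that does not match the on-chain record")
    return data


chain: dict[str, BlobRecord] = {}
storage: dict[str, bytes] = {}
model_weights = bytes(5_000_000)  # placeholder for a multi-megabyte AI model
rec = store_blob(chain, storage, model_weights)
print(rec.size, len(rec.blob_id))  # the chain holds a 64-character ID, not 5 MB of weights
```

The design keeps the chain's cost per blob constant no matter how large the blob is, while still enforcing integrity: a storage provider cannot swap in different bytes without failing the check in `read_blob`.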

The implications of this shift are profound for the concept of the "decentralized frontend." Right now, if a DeFi protocol is targeted by regulators, the team is often forced to take down the website. The protocol still runs on chain, but no one can use it because the interface is gone. Walrus enables the hosting of the frontend code itself as a decentralized blob. A "Walrus Site" is not served from a single server; it is reconstructed on the fly from the decentralized network. It cannot be taken down by a subpoena sent to a cloud provider because there is no cloud provider. There is only the protocol.
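A client loading a decentralized frontend does roughly what the paragraph above describes: it looks up which content a page corresponds to, asks whichever storage nodes are reachable, and accepts only bytes that match the committed digest. The Python sketch below models that loop under simplifications of our own: the `manifest` dictionary, the `Node` class, and `fetch_page` are hypothetical; each node serves whole files rather than erasure-coded slivers; and a SHA-256 digest again stands in for a real blob ID.

```python
import hashlib
from typing import Optional


def digest(data: bytes) -> str:
    """Content digest used as a stand-in blob ID."""
    return hashlib.sha256(data).hexdigest()


class Node:
    """A hypothetical storage node that may be offline or may return the wrong bytes."""

    def __init__(self, blobs: dict[str, bytes], online: bool = True):
        self.blobs = blobs
        self.online = online

    def get(self, blob_id: str) -> Optional[bytes]:
        if not self.online:
            raise ConnectionError("node unreachable")
        return self.blobs.get(blob_id)


def fetch_page(path: str, manifest: dict[str, str], nodes: list[Node]) -> bytes:
    """Resolve a path to a blob ID, then return the first response that verifies."""
    blob_id = manifest[path]
    for node in nodes:
        try:
            data = node.get(blob_id)
        except ConnectionError:
            continue  # offline node: move on to the next one
        if data is not None and digest(data) == blob_id:
            return data  # bytes match the committed digest, so the host need not be trusted
    raise LookupError(f"no reachable node returned valid data for {path}")


index_html = b"<html><body>Swap</body></html>"
manifest = {"/index.html": digest(index_html)}
nodes = [
    Node({}, online=False),                                     # unreachable
    Node({manifest["/index.html"]: b"<html>tampered</html>"}),  # returns wrong bytes
    Node({manifest["/index.html"]: index_html}),                # honest node
]
print(fetch_page("/index.html", manifest, nodes).decode())
```

Because the client verifies content instead of trusting a host, an offline or dishonest node only costs it a retry; the page fails to load only when no reachable node can produce matching data.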

We are also seeing this transform the concept of digital history. As blockchains age, their history grows massive. Storing the entire transaction history of a high-speed chain like Sui becomes a burden for validators. Walrus acts as an archival layer, allowing the active validators to stay lean while historical data is offloaded to the storage network, still accessible and verifiable but no longer clogging the active state. This helps the blockchain remain fast and cheap for decades rather than collapsing under its own weight after a few years of high activity.

The economic model backing this infrastructure is equally important. The WAL token is not a governance token searching for a purpose; it is a resource bond. To store data, you pay in WAL. To run a node and earn rewards, you stake WAL. The token represents the right to utilize the network's capacity. This ties the value of the token directly to the usage of the network. As more AI agents, social networks, and archival projects dump data into Walrus, the demand for the storage resource increases. It moves the industry away from speculation and toward utility-based economics, where the token price is a reflection of the network's actual GDP: its Gross Domestic Persistence.

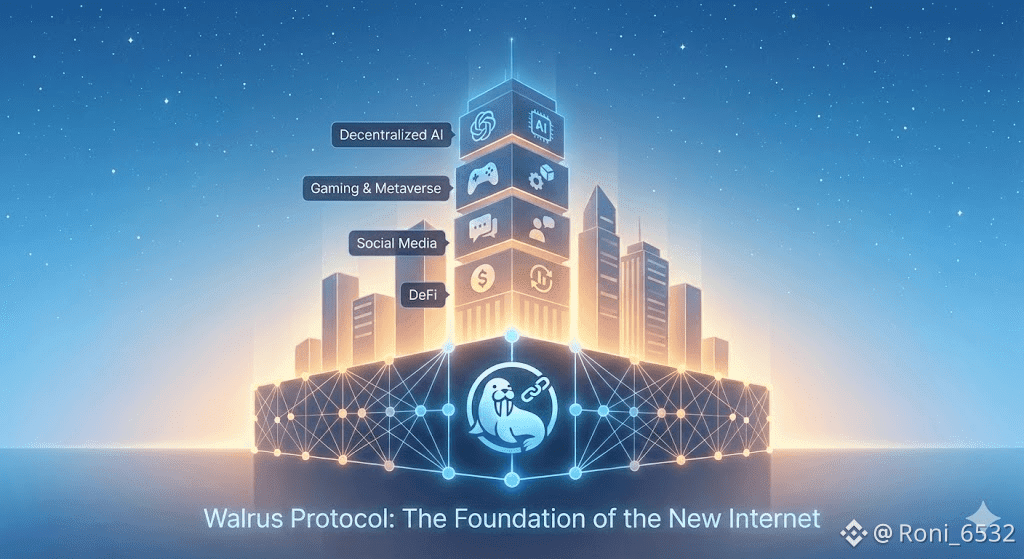

This shift toward specialized infrastructure is the sign of a maturing industry. In the early days, we tried to make one blockchain do everything. We wanted it to be the database, the computer, the bank, and the judge. We realized that this monolithic approach leads to congestion and high fees. The future is modular. It is composed of specialized layers, each doing one thing perfectly. Walrus is the storage layer. It is the boring, invisible, industrial-grade concrete foundation upon which the skyscrapers of the next-generation internet will be built.

Most users will never directly interact with the Walrus protocol. They will never run a blob upload from the command line or calculate the erasure coding redundancy of their family photos. They will simply notice that their decentralized social network loads instantly. They will notice that their favorite game has rich, high-definition assets that never disappear. They will notice that the AI agent they hired is still working five years later, even though the company that built it went bankrupt. They will enjoy the benefits of a truly persistent internet without ever knowing the name of the machinery keeping it alive.

This invisibility is the ultimate goal. Infrastructure is only successful when it becomes boring. We do not think about the TCP/IP protocols that carry our emails, or the transoceanic cables that carry our voice calls. We just trust that they work. For too long, crypto infrastructure has been loud, expensive, and fragile. We have been playing with toys. With the advent of protocols like Walrus, we are finally building the tools necessary for a civilization-grade digital economy.

We are moving from the era of "on chain" signaling to the era of "in network" reality. The distinction is subtle but absolute. "On chain" implies a limit, a constraint of the ledger. "In network" implies a boundless capacity, distributed and resilient. It is the difference between writing a message in a notebook and broadcasting it on a frequency that can never be jammed.

If we want this ecosystem to survive the next decade, we must stop pretending that cloud storage is a sufficient solution for decentralized problems. We must acknowledge that centralization is a systemic risk that grows with every byte of data we offload to Amazon or Google. We have the technology to fix this. We have the math in "Red Stuff" to make it efficient. We have the coordination layer in Sui to make it seamless. All that remains is for us to internalize the shift and start building as if permanence actually matters.

The next time you interact with a decentralized application, ask yourself where the data actually lives. If the answer is "the cloud," then you are not using a decentralized application; you are using a hybrid monster with a single point of failure. The transition to true decentralization requires us to embrace the invisible, heavy lifting of storage protocols. It is not the flashy narrative that drives bull markets, but it is the structural integrity that ensures we are still here when the market turns.

I may never use this directly, but the ecosystem cannot scale responsibly without it.